This page presents a brief summary of some of the research and projects I have done to date, starting with newer work at the top.

Leveraging Adversarial Training for Monocular Depth Estimation


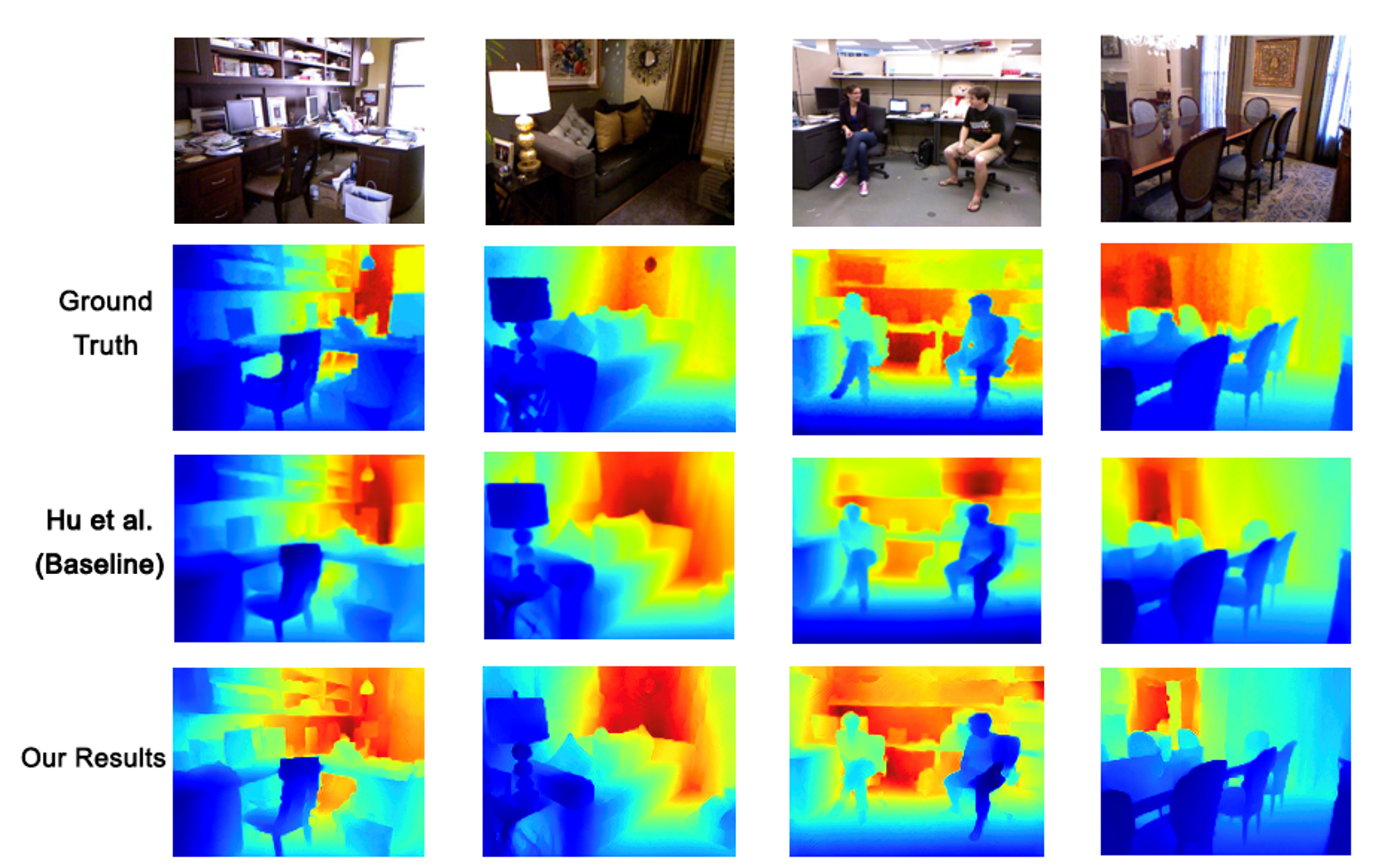

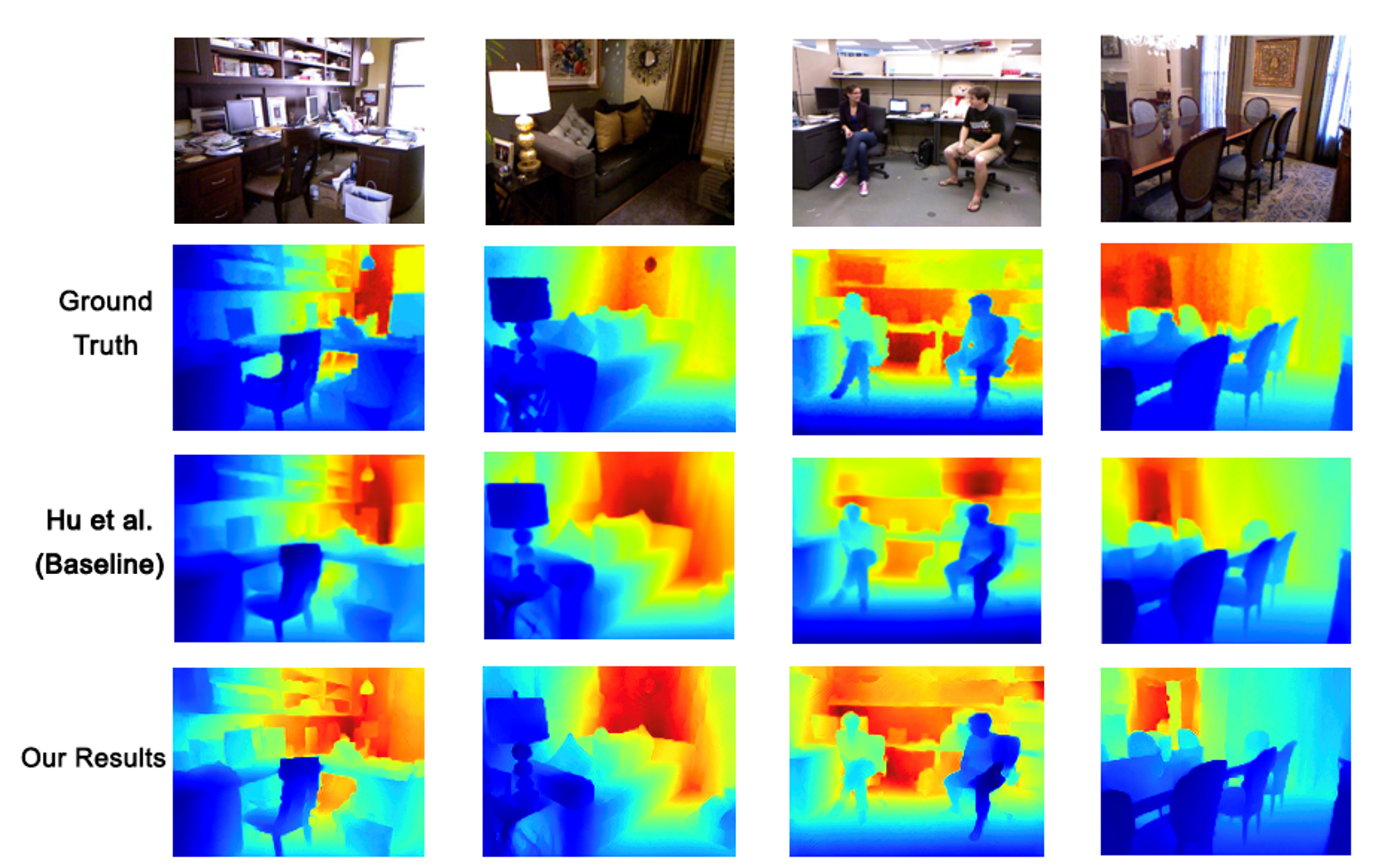

In this project, we tackle the problem of estimating the depth of a scene from a single 2D RGB image. We propose a generative adversarial approach that extends the recent work of Hu et al. [baseline]. We add a discriminator network to their architecture and feed it both ground-truth depth maps and the depth maps estimated by the generator network. The discriminator learns to distinguish the real depth maps from the artificial ones produced by the generator. We also use the structural similarity (SSIM) between the generated depth maps and the ground truth as a loss term for depth estimation training. We evaluate the proposed approach on the NYU Depth v2 dataset. The experimental results show that adversarial training improves the performance of the existing method.
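As a rough illustration of how the two training signals described above can be combined, here is a minimal NumPy sketch. It is not the project's code: the simplified `global_ssim` computes SSIM over the whole map instead of over local windows, and the function names and the weights `w_ssim` and `w_adv` are hypothetical placeholders, not the values used in the experiments.

```python
import numpy as np

def global_ssim(x, y, data_range=1.0):
    """Simplified SSIM over the whole depth map (no local Gaussian window)."""
    c1 = (0.01 * data_range) ** 2  # standard SSIM stabilising constants
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )

def discriminator_loss(d_real, d_fake, eps=1e-8):
    """Binary cross-entropy: ground-truth maps labelled 1, generated maps labelled 0.
    d_real / d_fake are the discriminator's output probabilities."""
    return -np.mean(np.log(d_real + eps) + np.log(1.0 - d_fake + eps))

def generator_loss(pred, gt, d_fake, w_ssim=1.0, w_adv=1e-3, eps=1e-8):
    """Hypothetical weighted sum of an SSIM term and an adversarial term."""
    l_ssim = (1.0 - global_ssim(pred, gt)) / 2.0  # in [0, 1], 0 when pred == gt
    l_adv = -np.mean(np.log(d_fake + eps))        # small when D is fooled (d_fake -> 1)
    return w_ssim * l_ssim + w_adv * l_adv, l_ssim, l_adv
```

In a real training loop the two losses would be minimised alternately, the discriminator on real and generated maps and the generator on `generator_loss`.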

In this project, an advanced driver assistance system (ADAS) is designed and implemented to protect an autonomous car, its passengers, and pedestrians moving near the car from possible dangers and to increase their safety. The system relies on computer vision with a monocular camera and warns the driver when a collision with a nearby car or pedestrian is likely. It uses the camera images to perform two separate tasks: detecting cars and pedestrians in the field of view, and estimating the depth of the image. The estimated depth gives the distance to each detected object, and this information is used to send a warning signal to the driver when a collision is probable.
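The step that combines the two tasks can be sketched as follows, assuming the depth network outputs metric depth (in meters) per pixel and the detector returns labelled boxes. The `Detection` class, the median-depth rule, and the per-class distance thresholds are illustrative assumptions, not the system's actual logic.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class Detection:
    label: str   # e.g. "car" or "pedestrian"
    box: tuple   # (x1, y1, x2, y2) in pixel coordinates, x2/y2 exclusive

def object_distance(depth_map, box):
    """Distance to an object: median depth inside its bounding box.
    The median is less sensitive to background pixels caught in the box."""
    x1, y1, x2, y2 = box
    return float(np.median(depth_map[y1:y2, x1:x2]))

def collision_warnings(depth_map, detections, thresholds):
    """Return (label, distance) for every object closer than its class threshold."""
    warnings = []
    for det in detections:
        d = object_distance(depth_map, det.box)
        if d < thresholds.get(det.label, float("inf")):
            warnings.append((det.label, d))
    return warnings
```

A fuller system would also track distances over time (for example, to estimate time to collision) rather than using a single frame.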

This research focuses on validating the correlation between corresponding ECoG, EEG, and fMRI data. The data are collected from epilepsy patients, and we will design a task that shows the same active brain regions in the blood-oxygen-level-dependent (BOLD) signal and related signals. This research is supported by the Royan Institute under the supervision of Dr. Khaligh-Razavi.

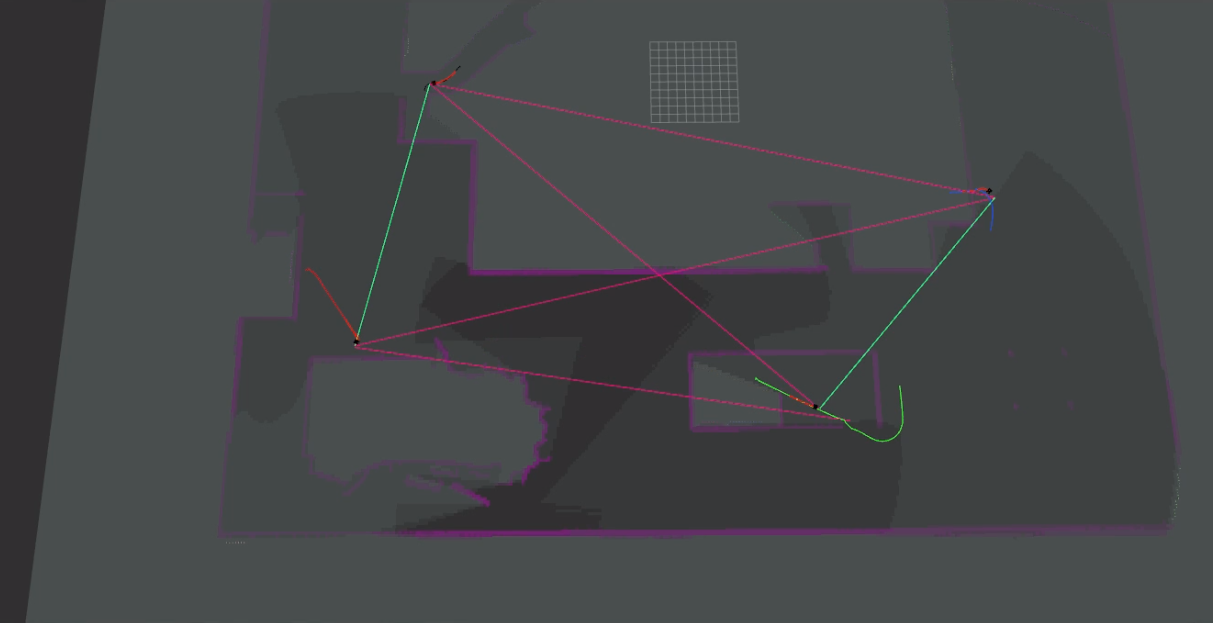

The goal of this project is an experimental study of the role of communication models in multirobot exploration algorithms.

For this purpose, a wireless communication simulator was implemented. It interacts with both the ROS nodes of the robots and a simulator (Gazebo) to receive requests, and it uses various signal propagation models to decide whether two robots can communicate.
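One common propagation model such a simulator can use is the log-distance path-loss model with a fixed attenuation per wall. The sketch below is a hypothetical example of that decision rule, not the simulator's implementation: the transmit power, receiver sensitivity, path-loss exponent, and per-wall loss are placeholder values, and in the real setup the number of obstructing walls would have to come from the simulated environment.

```python
import math

def received_power_dbm(tx_power_dbm, distance_m, n_walls=0,
                       pl_d0_db=40.0, d0_m=1.0, exponent=2.5, wall_loss_db=5.0):
    """Log-distance path loss plus a fixed attenuation per obstructing wall."""
    d = max(distance_m, d0_m)  # the model is only valid beyond the reference distance d0
    path_loss_db = pl_d0_db + 10.0 * exponent * math.log10(d / d0_m) + n_walls * wall_loss_db
    return tx_power_dbm - path_loss_db

def can_communicate(pos_a, pos_b, n_walls=0, tx_power_dbm=20.0, sensitivity_dbm=-80.0):
    """Two robots can talk if the received power clears the receiver sensitivity."""
    distance = math.dist(pos_a, pos_b)
    return received_power_dbm(tx_power_dbm, distance, n_walls) >= sensitivity_dbm
```

With these placeholder numbers, two robots in free space stay connected up to roughly 250 m, and each wall shortens that range; swapping in a different model only requires replacing `received_power_dbm`.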

This project was conducted under the supervision of Prof. F. Amigoni during my internship at AIRLab, Department of Electronics, Information and Bioengineering, Polytechnic University of Milan.

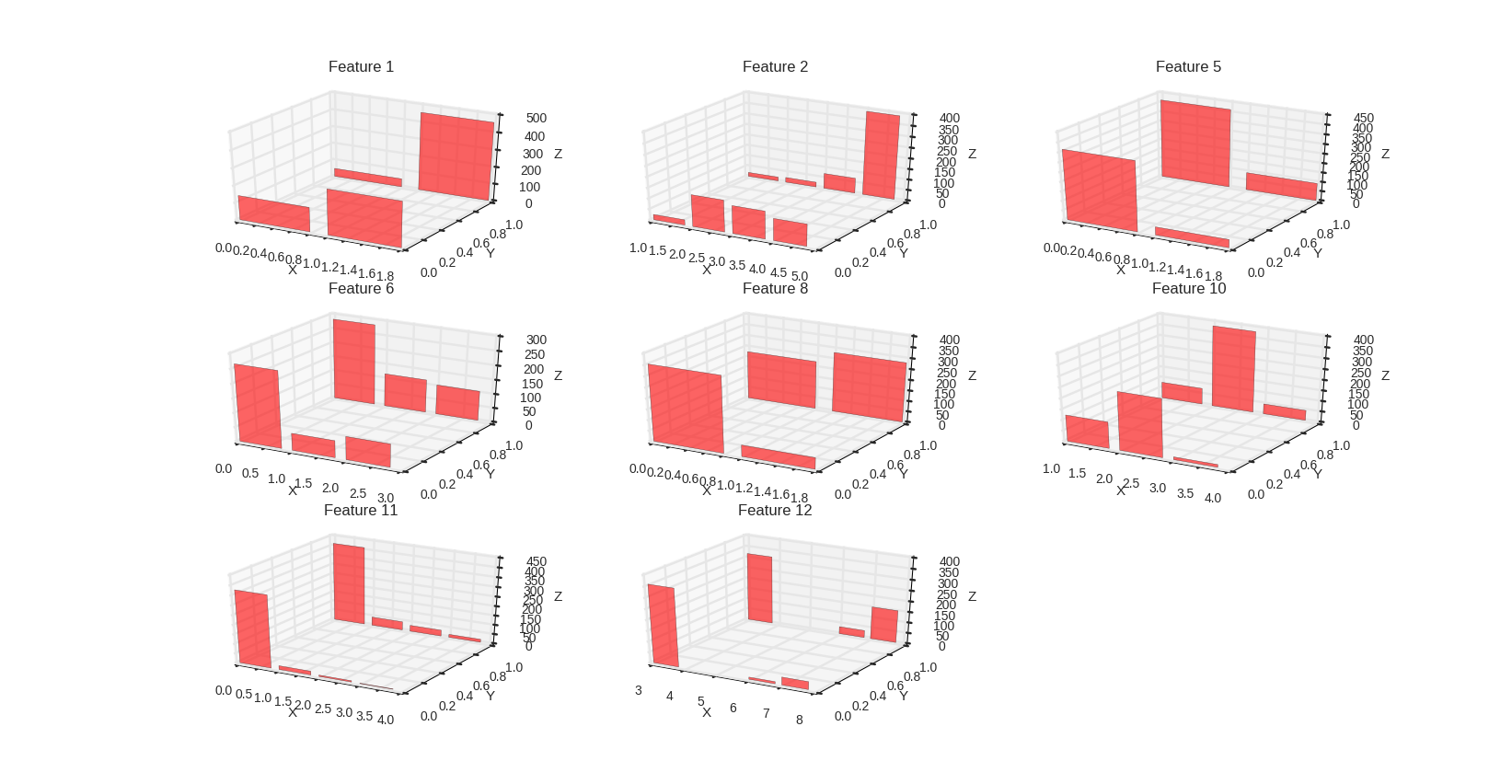

In this project, we implemented a naive Bayes model on a heart disease dataset (the UCI Heart Disease data). Preprocessing operations such as discretizing continuous values and filling in missing values were performed. Two probabilistic graphical models and three Bayesian networks were designed and evaluated using different subsets of the data.
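The pipeline described above (fill missing values, discretize, then fit a naive Bayes classifier) can be sketched in plain Python. This is a minimal illustration, not the project's code: mode imputation, equal-width binning, and Laplace smoothing with `alpha` are assumptions chosen for simplicity, and the function and class names are hypothetical. The `"?"` default marks missing entries, as in the UCI files.

```python
from collections import Counter
import math

def fill_missing(rows, missing="?"):
    """Replace missing entries with the most frequent value of their column."""
    n_cols = len(rows[0])
    modes = [Counter(r[j] for r in rows if r[j] != missing).most_common(1)[0][0]
             for j in range(n_cols)]
    return [[modes[j] if r[j] == missing else r[j] for j in range(n_cols)] for r in rows]

def discretize(value, lo, hi, n_bins):
    """Equal-width binning of a continuous value into 0 .. n_bins - 1."""
    if hi == lo:
        return 0
    b = int((value - lo) / (hi - lo) * n_bins)
    return min(max(b, 0), n_bins - 1)

class CategoricalNaiveBayes:
    def __init__(self, alpha=1.0):
        self.alpha = alpha  # Laplace smoothing strength

    def fit(self, X, y):
        self.class_counts = Counter(y)
        self.n = len(y)
        n_features = len(X[0])
        # counts[c][j][v] = number of class-c rows whose feature j equals v
        self.counts = {c: [Counter() for _ in range(n_features)] for c in self.class_counts}
        self.values = [set() for _ in range(n_features)]
        for row, c in zip(X, y):
            for j, v in enumerate(row):
                self.counts[c][j][v] += 1
                self.values[j].add(v)
        return self

    def log_posterior(self, row):
        """Unnormalised log P(c | row) = log P(c) + sum_j log P(x_j | c)."""
        scores = {}
        for c, n_c in self.class_counts.items():
            s = math.log(n_c / self.n)
            for j, v in enumerate(row):
                k = len(self.values[j])
                s += math.log((self.counts[c][j][v] + self.alpha) / (n_c + self.alpha * k))
            scores[c] = s
        return scores

    def predict(self, row):
        scores = self.log_posterior(row)
        return max(scores, key=scores.get)
```

Working in log space avoids numerical underflow when many features are multiplied together.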

Code is available on [github] [Technical Report (Persian)]

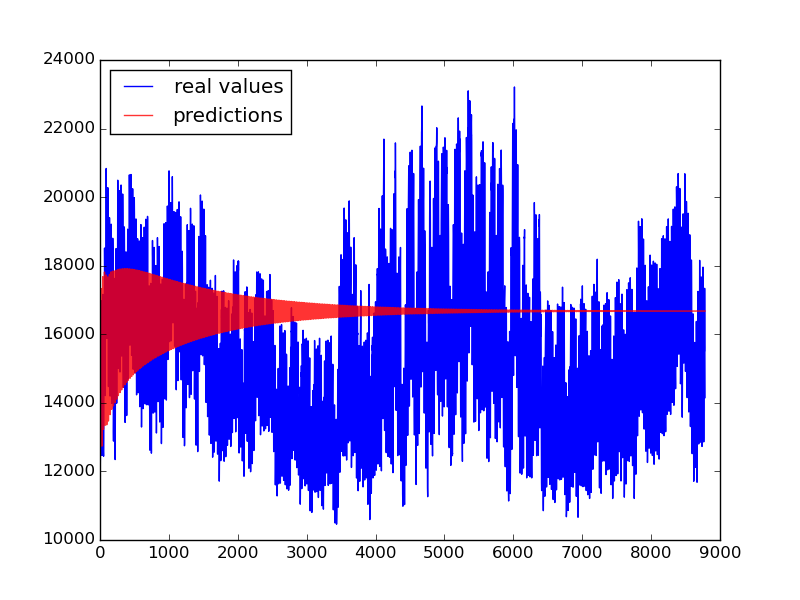

The analysis of experimental data observed at different points in time leads to new and unique problems in statistical modeling and inference. In this project, our goal was to predict the power usage of the province of Ontario, Canada, using usage data recorded from January 2002 to December 2016. We implemented parametric and non-parametric models and compared them using various evaluation metrics. This was the second project of the Statistical Machine Learning course.
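To make the kind of comparison described above concrete, here is a small NumPy sketch of one parametric model (an autoregressive AR(p) model fitted by least squares), a seasonal-naive baseline, and three common error metrics. The models and metrics actually used in the project are not listed here, so these are illustrative choices; `season` would be 12 for monthly data, for example, but that granularity is an assumption.

```python
import numpy as np

def fit_ar(series, p):
    """Least-squares AR(p) fit with intercept: y_t = c + a_1*y_{t-1} + ... + a_p*y_{t-p}."""
    y = np.asarray(series, dtype=float)
    n = len(y)
    # Column i (i >= 1) holds the lag-i values y_{t-i} for t = p, ..., n-1.
    X = np.column_stack([np.ones(n - p)] + [y[p - i:n - i] for i in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, y[p:], rcond=None)
    return coef  # [c, a_1, ..., a_p]

def forecast_ar(history, coef, steps):
    """Recursive multi-step forecast: each prediction is fed back as a lag."""
    p = len(coef) - 1
    buf = list(np.asarray(history, dtype=float)[-p:])
    out = []
    for _ in range(steps):
        lags = buf[::-1][:p]  # most recent first: y_{t-1}, ..., y_{t-p}
        nxt = coef[0] + float(np.dot(coef[1:], lags))
        out.append(nxt)
        buf.append(nxt)
    return np.array(out)

def seasonal_naive(history, season, steps):
    """Baseline: repeat the value observed one season earlier."""
    history = np.asarray(history, dtype=float)
    return np.array([history[len(history) - season + (h % season)] for h in range(steps)])

def evaluate(y_true, y_pred):
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    return {
        "MAE": float(np.mean(np.abs(err))),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAPE": float(np.mean(np.abs(err / y_true)) * 100.0),  # assumes no zeros in y_true
    }
```

Evaluating every model on the same held-out final stretch of the series keeps the comparison fair, since time-series data should not be split randomly.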

Code is available on [github] [Technical Report (English)] [Technical Report (Persian)]

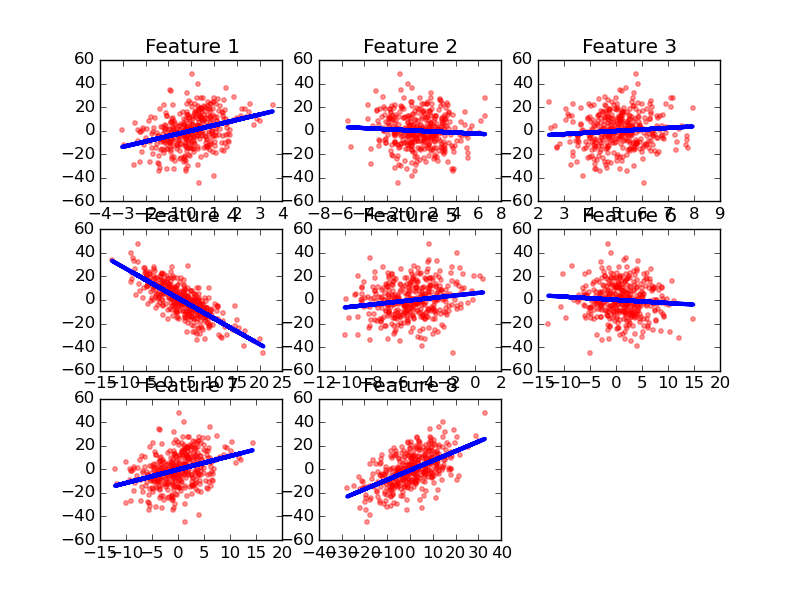

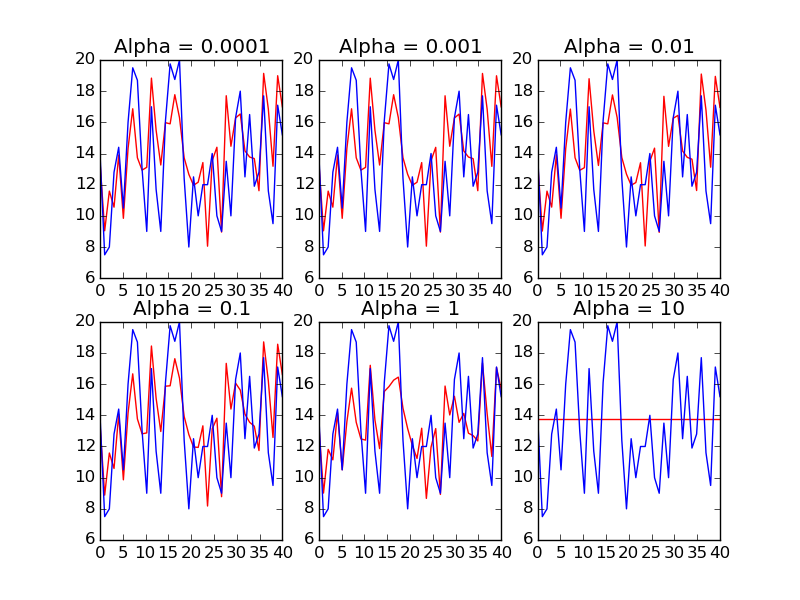

Implementation of simple linear regression and the Lasso on two different datasets. Various evaluation metrics such as RSS, R², AIC, and LOOCV were calculated and analyzed on both the training and test sets. Backward and forward model selection methods were examined and their results compared. This was the first project of the Statistical Machine Learning course.
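The metrics named above can all be computed from a single ordinary least-squares fit. The sketch below is an illustration, not the project's code: it uses the Gaussian form of AIC (up to an additive constant) and the standard leave-one-out shortcut for OLS, which divides each residual by one minus its leverage instead of refitting the model n times. The `forward_selection` helper is a hypothetical greedy forward search that adds predictors while AIC keeps decreasing.

```python
import numpy as np

def ols_diagnostics(X, y):
    X = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])  # add intercept
    y = np.asarray(y, dtype=float)
    n, k = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    rss = float(resid @ resid)
    tss = float(((y - y.mean()) ** 2).sum())
    # Leverages are the diagonal of the hat matrix H = X (X^T X)^{-1} X^T.
    h = np.diag(X @ np.linalg.pinv(X.T @ X) @ X.T)
    return {
        "beta": beta,
        "RSS": rss,
        "R2": 1.0 - rss / tss,
        "AIC": n * np.log(rss / n) + 2 * k,  # Gaussian AIC, up to a constant
        "LOOCV": float(np.mean((resid / (1.0 - h)) ** 2)),  # exact for OLS, no refitting
    }

def forward_selection(X, y):
    """Greedily add the predictor that lowers AIC most; stop when none does."""
    X = np.asarray(X, dtype=float)
    remaining, chosen = list(range(X.shape[1])), []
    best_aic = ols_diagnostics(X[:, []], y)["AIC"]  # intercept-only model
    while remaining:
        aic, j = min((ols_diagnostics(X[:, chosen + [j]], y)["AIC"], j) for j in remaining)
        if aic >= best_aic:
            break
        best_aic = aic
        chosen.append(j)
        remaining.remove(j)
    return chosen
```

Backward selection works the same way in reverse, starting from all predictors and dropping the one whose removal lowers AIC most.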

Code is available on [github] [Technical Report (Persian)]

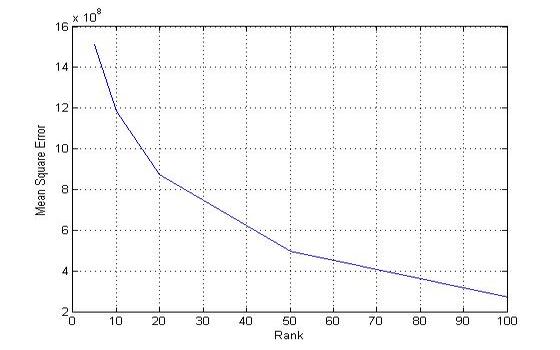

This is an implementation of the face recognition system described in the paper "Facial Recognition with Singular Value Decomposition". The paper applies the concepts of vector spaces and subspaces to face recognition. The set of known faces with m × n pixels forms a subspace, called the “face space”, of the “image space” containing all images with m × n pixels. This face space best captures the variation among the known faces. The basis of the face space is defined by the singular vectors of the set of known faces. A new image is projected onto this face space, and its projection is compared with the projections of the known faces to identify the person. Since the face space has a much lower dimension than the whole image space, comparing projections is much easier than comparing the original images pixel by pixel.
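The project itself was written in MATLAB; the NumPy sketch below only illustrates the same idea. Subtracting the mean face before the SVD, the number of basis vectors `r`, and the nearest-neighbour rule are assumptions of this sketch, and the function names are hypothetical. The returned distance could be compared against a threshold to reject unknown faces.

```python
import numpy as np

def build_face_space(train_faces, r):
    """train_faces: array of shape (N, m*n), one flattened face per row."""
    A = np.asarray(train_faces, dtype=float).T    # columns are faces: (m*n, N)
    mean_face = A.mean(axis=1, keepdims=True)
    U, s, Vt = np.linalg.svd(A - mean_face, full_matrices=False)
    basis = U[:, :r]                              # first r left singular vectors span the face space
    coords = basis.T @ (A - mean_face)            # projections of the known faces, shape (r, N)
    return mean_face, basis, coords

def identify(face, mean_face, basis, coords, labels):
    """Project a new face and return the label of the closest known projection."""
    x = basis.T @ (np.asarray(face, dtype=float).reshape(-1, 1) - mean_face)
    dists = np.linalg.norm(coords - x, axis=0)
    best = int(np.argmin(dists))
    return labels[best], float(dists[best])
```

Each comparison now involves only `r` numbers per face instead of m × n pixels, which is the saving the paragraph above describes.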

One of the Engineering Mathematics course projects, implemented in MATLAB.

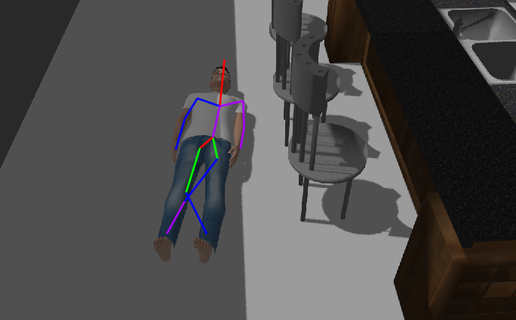

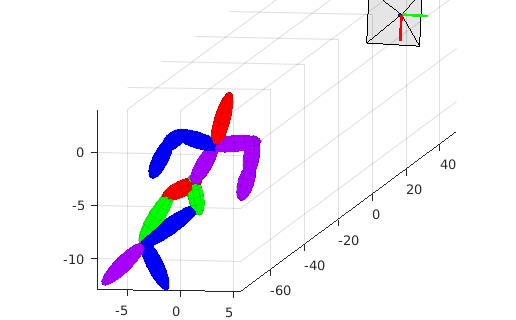

A two-phase victim detection and 3D pose estimation system implemented for a rescue robot.

- In the first stage, an object detection method is used to detect lying-down human bodies (victims).

- In the second stage, a CNN is used to find the body joints. These joints are then used to reconstruct a 3D model of the human body and the camera pose: a matching pursuit algorithm estimates a sparse representation of the 3D pose and the relative camera from 2D image evidence alone.
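The greedy step at the heart of matching pursuit can be sketched as follows. This is the generic algorithm over a dictionary of unit-norm atoms, not the pose pipeline: in the 3D pose setting the atoms would be basis poses projected through an estimated camera, and both the projection and the camera estimation are omitted here.

```python
import numpy as np

def matching_pursuit(D, x, n_atoms):
    """Greedy sparse coding: approximate x as D @ coef using n_atoms selections.
    D must have unit-norm columns (the atoms)."""
    residual = np.asarray(x, dtype=float).copy()
    coef = np.zeros(D.shape[1])
    for _ in range(n_atoms):
        corr = D.T @ residual               # correlation of every atom with the residual
        k = int(np.argmax(np.abs(corr)))    # best-matching atom
        coef[k] += corr[k]                  # accumulate its weight
        residual -= corr[k] * D[:, k]       # remove its contribution from the residual
    return coef, residual
```

Because each step subtracts the projection onto one unit-norm atom, the residual norm never increases, and the number of iterations directly controls how sparse the representation is.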

An alpha version of the code and demos is available here: [github]

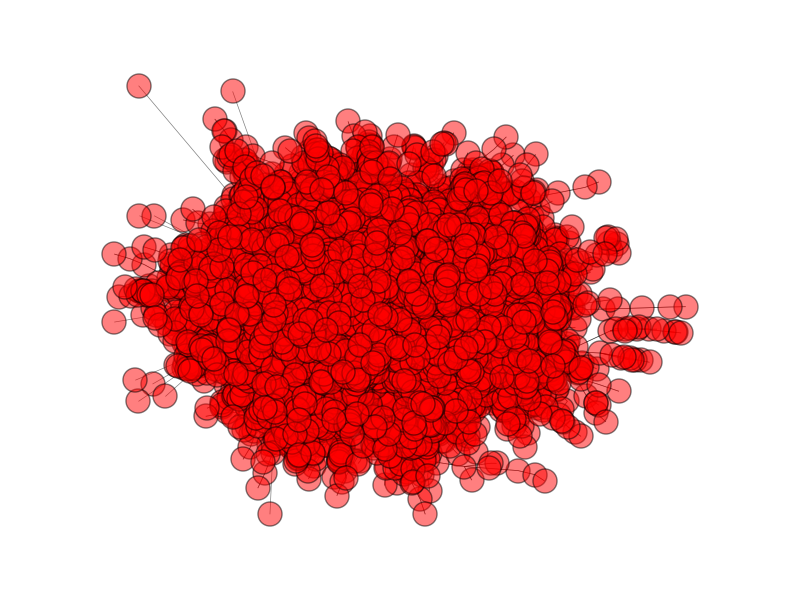

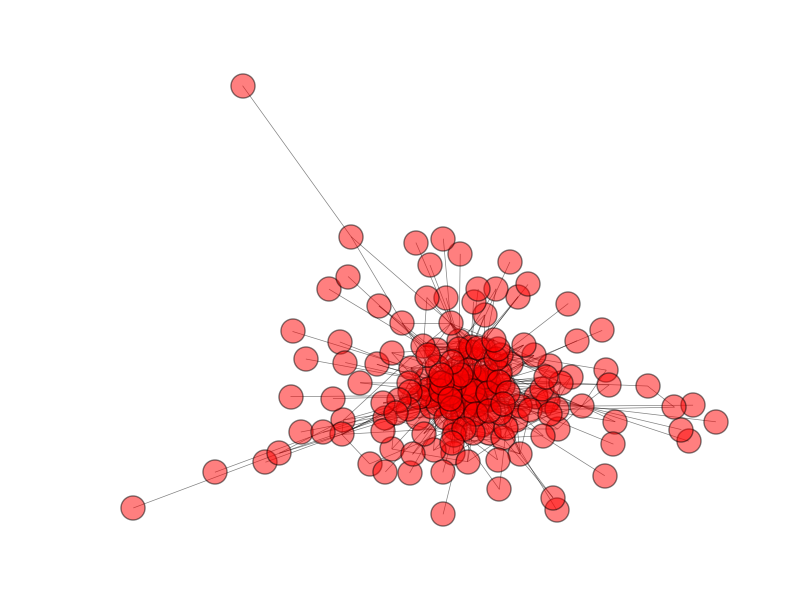

In this project, we used the Louvain method implementation from the Python NetworkX library for community detection in social networks. The method optimizes modularity, a scalar value between -1/2 and 1 that compares the density of edges inside communities with the density of edges between communities. Optimizing this value theoretically yields the best grouping of the nodes of a given network with respect to modularity. The Louvain method is a greedy optimization method that appears to run in O(n log n) time.
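To show what the Louvain method is maximizing, here is a dependency-free sketch that computes the modularity of a given partition of an undirected, unweighted graph, using Q = Σ_c [L_c / m − (d_c / 2m)²], where m is the number of edges, L_c the number of edges inside community c, and d_c the total degree of its nodes. It does not implement Louvain itself; the function name and edge-list input format are choices made for this sketch.

```python
from collections import defaultdict

def modularity(edges, communities):
    """Newman modularity of an undirected, unweighted graph.

    edges: list of (u, v) pairs; communities: list of disjoint sets of nodes.
    """
    m = len(edges)
    degree = defaultdict(int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    node_to_comm = {n: i for i, comm in enumerate(communities) for n in comm}
    internal = defaultdict(int)  # L_c: edges with both endpoints in community c
    for u, v in edges:
        if node_to_comm[u] == node_to_comm[v]:
            internal[node_to_comm[u]] += 1
    q = 0.0
    for i, comm in enumerate(communities):
        d_c = sum(degree[n] for n in comm)  # total degree of the community
        q += internal[i] / m - (d_c / (2 * m)) ** 2
    return q
```

For two triangles joined by a single edge, splitting the graph into the two triangles gives Q = 5/14 ≈ 0.357, while putting every node in one community gives Q = 0. In NetworkX 2.8 and later, `nx.community.louvain_communities(G, seed=...)` returns such a partition, and `nx.community.modularity(G, communities)` computes the same quantity.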

The report and code are available on:

[github] [Technical Report(Persian)]

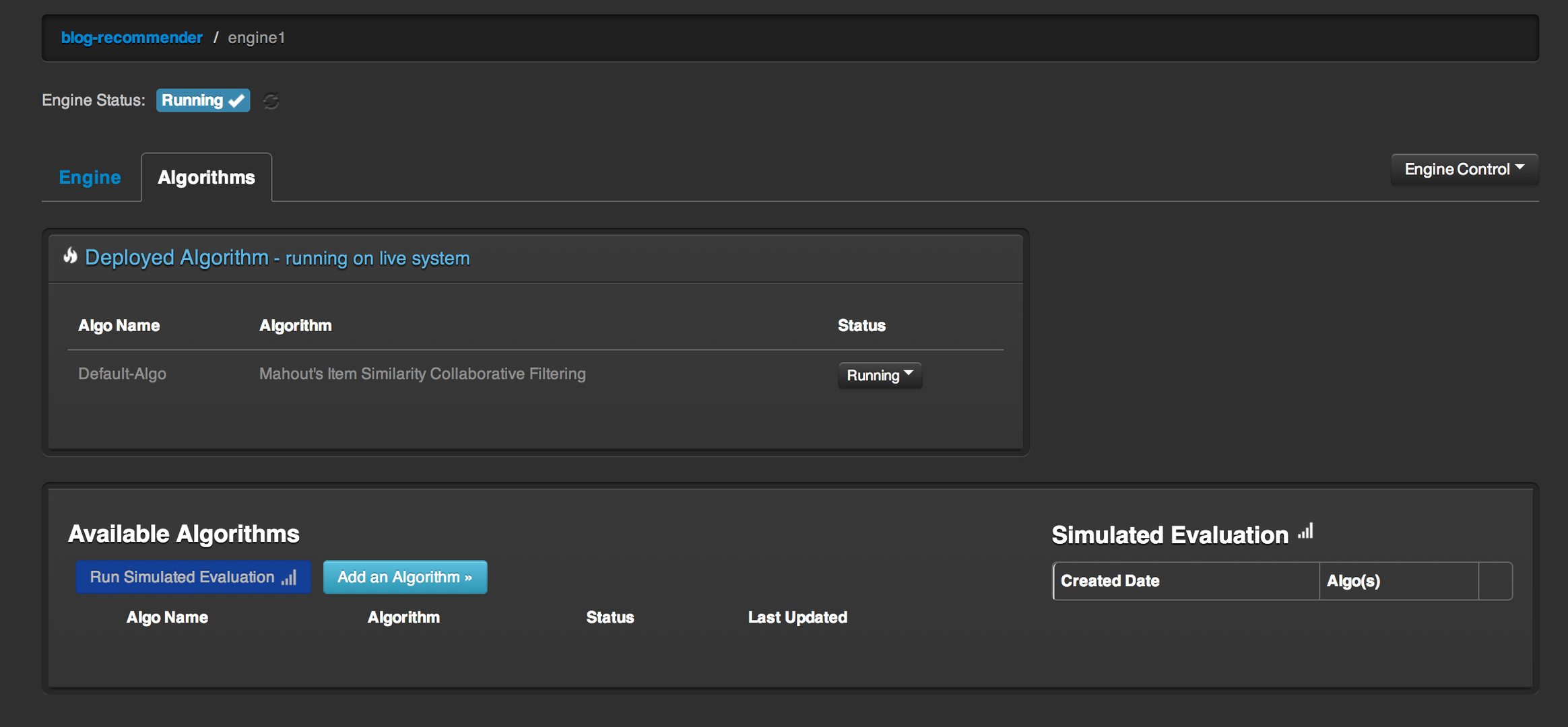

A shopping recommender system implemented by "Havij Team" in the Sharif Mobile Programming Challenge 2016, using Apache PredictionIO and PHP Laravel for the back end and Android for the client. The team placed 9th out of 60 participating teams.

Apache PredictionIO is an open-source machine learning framework for developers, data scientists, and end users. It supports event collection, algorithm deployment, evaluation, and querying predictive results via REST APIs. It is built on scalable open-source services such as Hadoop, HBase (and other databases), Elasticsearch, and Spark, and it implements what is called a Lambda Architecture.
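As an illustration of the event-collection side, the sketch below builds (but does not send) a request that records a user-item interaction, using only the Python standard library. It assumes a PredictionIO-style event server at `http://localhost:7070` with an `/events.json?accessKey=...` endpoint; check the host, port, and event names against your own deployment. The helper name and the `"view"` event are hypothetical examples, not the team's back-end code, which was written in PHP Laravel.

```python
import json
import urllib.parse
import urllib.request

def build_event_request(base_url, access_key, event, user_id, item_id):
    """Build (but do not send) a POST that records a user-item event."""
    payload = {
        "event": event,                # e.g. "view" or "buy"
        "entityType": "user",
        "entityId": user_id,
        "targetEntityType": "item",
        "targetEntityId": item_id,
    }
    url = f"{base_url}/events.json?accessKey={urllib.parse.quote(access_key)}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen(req)` would send it, which requires a running event server.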

We all know that natural disasters and accidents take a huge toll on us and the people we love. Although we cannot prevent these kinds of accidents (at least not yet), we can use our tools and technology to help us in these situations.

This is our contribution to the Robot Rescue Simulation platform: a base code that lets newcomers develop new high-level algorithms faster, without wasting time on low-level implementation of base structures.

That is what we are trying to achieve here, specifically by using robots. Our main goal is to develop basic modules that can later be used in a bigger framework and interact with each other.

The team description paper briefly explains these modules and the framework we are prototyping to optimize them for the tasks in the challenge. As discussed in the "Future of Robot Rescue Simulation" workshop, our team has designed a new ROS framework structure and Gazebo simulation environment, whose details are explained in the paper. We, team "S.O.S VR", are participating in the RoboCup Rescue Virtual Robot league for the first time, yet our team has already won the Agent Simulation league in two tournaments.

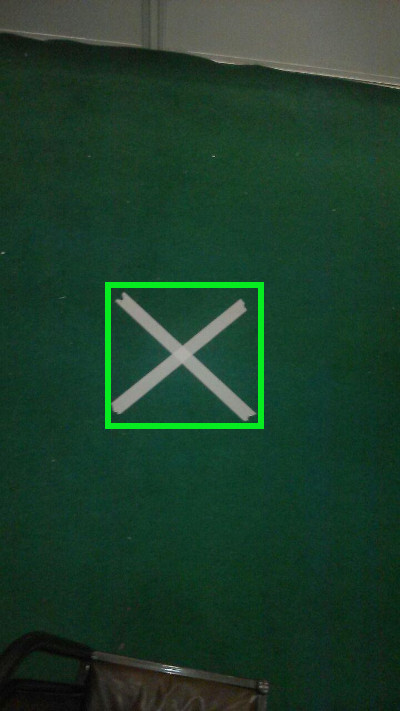

Template matching is a technique for finding a smaller image inside a larger one. It slides the small image across the big one and computes which part of the bigger image is most likely to match the small image. This algorithm always returns a value, unlike Haar cascades, which return a position only when the classifier detects the object. Template matching shifts a template (a picture of the desired shape in this problem) horizontally and vertically over the whole test image. However, because the yaw of the target is not known (it is built in the first round), plain template matching does not give good results. Therefore, I implemented rotation of the template image: matching is repeated with the template rotated in 1° increments up to 30°. The highest score of each round is saved and compared across all rounds, and the highest overall score gives the best-matched target with its corresponding angle.
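The rotate-and-match loop described above can be sketched with NumPy alone (version 1.20 or later, for `sliding_window_view`). This is an illustrative reimplementation, not the original code: normalized cross-correlation as the score, nearest-neighbour rotation with zero fill, and the function names are all assumptions of this sketch. Libraries such as OpenCV provide faster equivalents.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def rotate_nearest(img, angle_deg):
    """Rotate a 2-D array about its centre (nearest neighbour, zero fill, same shape)."""
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = np.deg2rad(angle_deg)
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, look up the source pixel it came from.
    src_x = np.cos(t) * (xs - cx) + np.sin(t) * (ys - cy) + cx
    src_y = -np.sin(t) * (xs - cx) + np.cos(t) * (ys - cy) + cy
    sx, sy = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros(img.shape, dtype=float)
    out[valid] = img[sy[valid], sx[valid]]
    return out

def ncc_map(image, template):
    """Normalised cross-correlation of the template at every valid position."""
    windows = sliding_window_view(image, template.shape)  # (H-h+1, W-w+1, h, w)
    t = template - template.mean()
    w = windows - windows.mean(axis=(2, 3), keepdims=True)
    num = (w * t).sum(axis=(2, 3))
    den = np.sqrt((w ** 2).sum(axis=(2, 3)) * (t ** 2).sum()) + 1e-12
    return num / den

def match_rotated(image, template, max_angle=30, step=1):
    """Try each rotation; keep the best score, its (row, col) position, and angle."""
    best = (-np.inf, None, None)
    for angle in range(0, max_angle + 1, step):
        scores = ncc_map(image, rotate_nearest(template, angle))
        idx = np.unravel_index(np.argmax(scores), scores.shape)
        if scores[idx] > best[0]:
            best = (float(scores[idx]), (int(idx[0]), int(idx[1])), angle)
    return best
```

For small templates, neighbouring angles can round to the same pixels, so the angle estimate is only as fine as the template resolution allows.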

14taher@gmail.com

Curriculum Vitae